A new patent (number ) for a "Sensor Based Display Environment" at the U.S. Patent & Trademark Office shows that Apple is working on a motion-based 3D interface for its iOS devices. From reading the patent, though, there seems to be no reason why the technology couldn't also be implemented on the Mac.

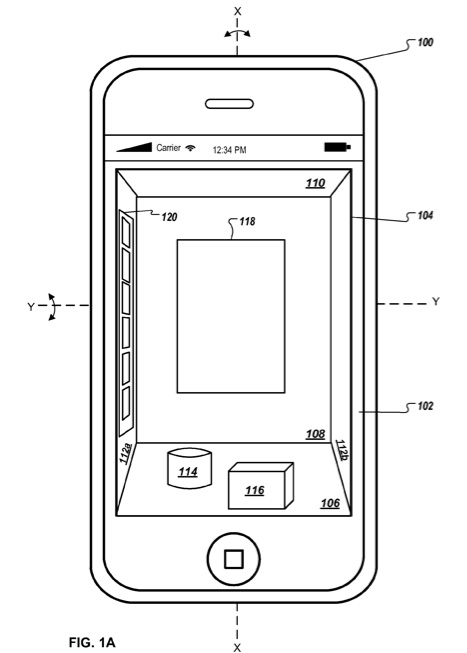

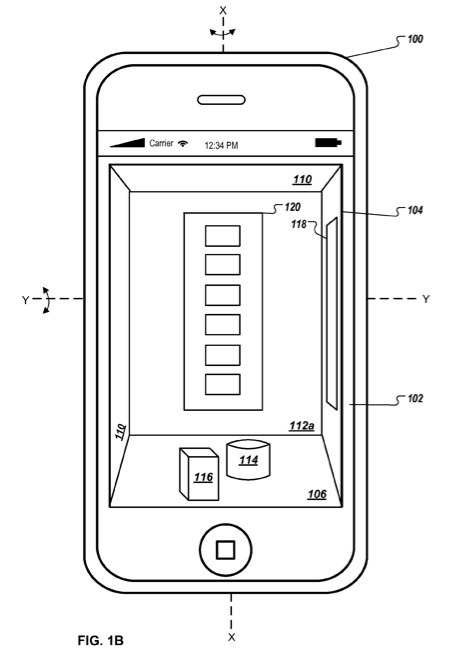

According to the patent, a three-dimensional display environment for a mobile device uses orientation data from one or more onboard sensors to automatically determine and display a perspective projection of the 3D environment, without the user physically interacting with (e.g., touching) the display. Patrick Piedmonte is the inventor.

Here's Apple's background and summary of the invention: "Modern computer operating systems often provide a desktop graphical user interface ('GUI') for displaying various graphical objects. Some examples of graphical objects include windows, taskbars, docks, menus and various icons for representing documents, folders and applications.

"A user can interact with the desktop using a mouse, trackball, track pad or other known pointing device. If the GUI is touch sensitive, then a stylus or one or more fingers can be used to interact with the desktop. A desktop GUI can be two-dimensional (2D) or three-dimensional (3D).

"Modern mobile devices typically include a variety of onboard sensors for sensing the orientation of the mobile device with respect to a reference coordinate frame. For example, a graphics processor on the mobile device can display a GUI in landscape mode or portrait mode based on the orientation of the mobile device.

"Due to the limited size of the typical display of a mobile device, a 3D GUI can be difficult to navigate using conventional means, such as a finger or stylus. For example, to view different perspectives of the 3D GUI, two hands are often needed: one hand to hold the mobile device and the other hand to manipulate the GUI into a new 3D perspective.

"A 3D display environment for mobile device is disclosed that uses orientation data from one or more onboard sensors to automatically determine and display a perspective projection of the 3D display environment based on the orientation data without the user physically interacting with (e.g., touching) the display. In some implementations, the display environment can be changed based on gestures made a distance above a touch sensitive display that incorporates proximity sensor arrays.

"In some implementations, a computer-implemented method is performed by one or more processors onboard a handheld mobile device. The method includes generating a 3D display environment; receiving first sensor data from one or more sensors onboard the mobile device, where the first sensor data is indicative of a first orientation of the mobile device relative to a reference coordinate frame; determining a first perspective projection of the 3D display environment based on the first sensor data; displaying the first perspective projection of the 3D display environment on a display of the mobile device; receiving second sensor data from the one or more sensors onboard the mobile device, where the second sensor data is indicative of a second orientation of the mobile device relative to the reference coordinate frame; determining a second perspective projection of the 3D display environment based on the second sensor data; and displaying the second perspective projection of the 3D display environment on the display of the mobile device."
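To make the steps in that last paragraph concrete, here is a minimal Python sketch of the general idea: take an orientation reading, rotate a 3D scene to match it, and project the result onto a 2D display, then repeat for the next reading. It is not Apple's implementation. The `Orientation` type, the function names, the pitch/yaw convention, and the `focal_length` and `camera_distance` values are all hypothetical choices made for illustration, and the "sensor readings" are hard-coded rather than read from real hardware.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Orientation:
    """Hypothetical device orientation relative to a reference frame (radians)."""
    pitch: float  # rotation about the x-axis
    yaw: float    # rotation about the y-axis


def rotate(point, orientation):
    """Rotate a 3D point by the yaw, then the pitch, of the given orientation."""
    x, y, z = point
    cy, sy = math.cos(orientation.yaw), math.sin(orientation.yaw)
    x, z = cy * x + sy * z, -sy * x + cy * z
    cp, sp = math.cos(orientation.pitch), math.sin(orientation.pitch)
    y, z = cp * y - sp * z, sp * y + cp * z
    return (x, y, z)


def perspective_projection(scene, orientation, focal_length=2.0, camera_distance=5.0):
    """Project each 3D point to 2D display coordinates for one orientation."""
    projected = []
    for point in scene:
        x, y, z = rotate(point, orientation)
        depth = camera_distance + z  # distance from the assumed virtual camera
        if depth <= 0:
            continue  # point is behind the camera, so it is not drawn
        scale = focal_length / depth  # farther points shrink toward the center
        projected.append((x * scale, y * scale))
    return projected


def render_sequence(scene, readings):
    """Compute one projection per orientation reading, in order."""
    return [perspective_projection(scene, reading) for reading in readings]


# A unit cube stands in for the 3D display environment.
CUBE = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]

# Two made-up readings: device held flat, then turned 20 degrees.
first = Orientation(pitch=0.0, yaw=0.0)
second = Orientation(pitch=0.0, yaw=math.radians(20))
first_view, second_view = render_sequence(CUBE, [first, second])
```

Here `first_view` and `second_view` play the role of the first and second perspective projections in the patent's description: the scene itself never changes, only the viewpoint derived from the device's orientation. A real device would feed in live sensor readings and redraw continuously.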